Power of Scrapers!

- Apoorve Goyal

- Mar 21, 2020

- 2 min read

Spiders! Ah! They are so smart! Obviously, they crawl so swiftly. But it's not a biology class. Right?

    if True:
        print('Good Job Sir! Go ahead.')
    else:
        print('You are all set, Sir. Just scroll down.')

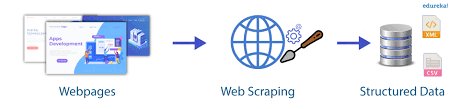

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites.

Suppose you want a list of your favourite books from Amazon, but when you hit enter, to your surprise, there are so many of them. Copy-paste is the very first thing one tries here. Tedious, eh? So the question arises: is scraping the same?

Oh yes! It is. It too is a copy-paste system, with a little bit of coding. The work you had been doing manually can be done with a simple script. Web scraping software may access the World Wide Web directly using the Hypertext Transfer Protocol, or through a web browser, and perform a fetch-extract operation.
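To make the fetch-extract idea concrete, here is a minimal sketch that uses only Python's standard library. The names `TitleParser`, `extract_title` and `fetch` are made up for this illustration, and the sketch assumes we only care about the page's `<title>` text. Real scrapers usually lean on a dedicated library instead.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TitleParser(HTMLParser):
    """Collects the text found inside the page's <title> tag."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only keep text that appears between <title> and </title>.
        if self._in_title:
            self.title += data


def extract_title(html):
    """Extract step: pull the title text out of an HTML string."""
    parser = TitleParser()
    parser.feed(html)
    return parser.title.strip()


def fetch(url):
    """Fetch step: download the page source over HTTP (needs network access)."""
    with urlopen(url) as response:
        return response.read().decode("utf-8", errors="replace")
```

With a network connection, `extract_title(fetch("https://quotes.toscrape.com"))` chains the two steps: one call does the "copy", the other does the "paste".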

Python has a variety of libraries for this: BeautifulSoup, Scrapy (Spider) and Selenium.

I have listed these tools in increasing order of their potential to fetch data and get a JSON response easily.

    # Code snippet to extract the title from quotes.toscrape.com
    import scrapy


    class QuoteSpider(scrapy.Spider):
        # The scrapy.Spider class gives cool stuff for scraping data
        name = "quotes"
        start_urls = [
            'https://quotes.toscrape.com',  # pass the list of urls to extract
        ]

        def parse(self, response):
            # response contains the source code of the website
            title = response.css('title').extract()
            # yield is used with a generator, which Scrapy uses behind the scenes
            yield {'titletext': title}

The best part is, nowadays we have many extensions for scraping sites without coding.

An extension called Web Scraper is available for Google Chrome. Similarly, there is a dedicated scraper for LinkedIn, 'LinkedIn Helper'. You just need to provide the start URL and define the sequence of crawling. You are done!

This is how a Web Scraper extension works

Scraping is a need for companies today, mainly startups. Buying a database from Azure, AWS, Oracle, etc. costs a lot. Therefore, to fulfil their data requirements, web scrapers are always in demand. One can even create one's own database for Machine Learning projects with this skill. It won't be bragging to say that I started working for a startup when I didn't even know this term.
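As a rough idea of what "creating your own database" could look like, here is a small sketch that stores scraped rows in SQLite using Python's built-in `sqlite3` module. The table layout, the helper names `save_quotes` and `quotes_by`, and the sample rows are all made up for illustration. A real project would feed in whatever the scraper yields.

```python
import sqlite3


def save_quotes(conn, quotes):
    """Store scraped (text, author) pairs in a table, creating it if needed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS quotes (text TEXT NOT NULL, author TEXT NOT NULL)"
    )
    conn.executemany("INSERT INTO quotes (text, author) VALUES (?, ?)", quotes)
    conn.commit()


def quotes_by(conn, author):
    """Read back every stored quote for one author, in insertion order."""
    rows = conn.execute(
        "SELECT text FROM quotes WHERE author = ? ORDER BY rowid", (author,)
    )
    return [text for (text,) in rows]


# Made-up rows standing in for scraper output.
sample = [
    ("Sample quote one", "Author A"),
    ("Sample quote two", "Author B"),
    ("Sample quote three", "Author A"),
]

conn = sqlite3.connect(":memory:")  # pass a file path instead to keep the data
save_quotes(conn, sample)
print(quotes_by(conn, "Author A"))
```

Swapping `":memory:"` for a file name such as `quotes.db` (a hypothetical name) keeps the data on disk between runs.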

But believe me, it isn't that difficult.

Just follow these:

You have the recipe! What are you waiting for, then?

Give it a try! :-)
